Introduction: Java Cache

From Resin 4.0 Wiki


Latest revision as of 02:17, 18 October 2013

Faster application performance is possible with Java caching by saving the results of long calculations and reducing database load. The Java caching API is being standardized as JCache (JSR-107). In combination with Java Dependency Injection (CDI), you can use caching in a completely standard fashion in the Resin Application Server. You'll typically want to look at caching when your application starts slowing down, or when your database or another expensive resource starts getting overloaded. Caching is useful when you want to:

- Improve latency

- Reduce database load

- Reduce CPU use


Cache Performance Benefits

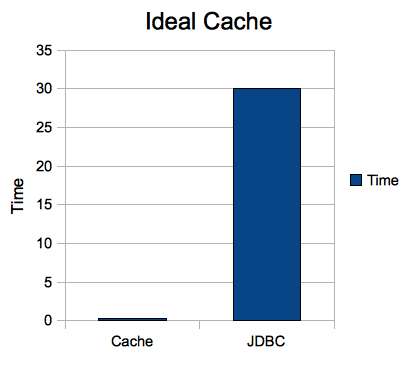

Since reducing database load is a typical cache benefit, it's useful to create a micro-benchmark to see how a cache can help. This is just a simple test with MySQL running on the same server and a trivial query. In other words, it's not trying to exaggerate the value of the cache: almost any real cache use will have a longer "doLongCalculation" than this simple example, so the cache will benefit even more.

The micro-benchmark has a simple JDBC query in the "doLongCalculation" method:

"SELECT value FROM test WHERE id=?"

To get useful data, the cached call to "doStuff" is repeated 300k times and compared with 300k direct calls to "doLongCalculation".

Although the speedup is realistic (the 100x is a measured result with Resin Cache), this is also an ideal situation where the item is always in the cache. In other words, it's a real cache with a 0% miss ratio.
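The benchmark's "doStuff" and "doLongCalculation" methods aren't shown here, so the following is only a minimal sketch of the usual cache-aside pattern under stated assumptions: a ConcurrentHashMap stands in for the Resin cache, and a CPU-bound loop stands in for the JDBC query. The class name, the hit/miss counters, and both method bodies are hypothetical.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicLong;

// Cache-aside sketch of the benchmark. ASSUMPTIONS: a ConcurrentHashMap stands in
// for the Resin/JCache cache, and doLongCalculation() stands in for the JDBC query
// "SELECT value FROM test WHERE id=?". Names and bodies are hypothetical.
class CacheAsideBenchmark {
  private final Map<Integer, String> cache = new ConcurrentHashMap<>();
  final AtomicLong hits = new AtomicLong();
  final AtomicLong misses = new AtomicLong();

  // Hypothetical stand-in for the expensive query: burns some CPU.
  String doLongCalculation(int id) {
    long h = id;
    for (int i = 0; i < 1_000; i++) {
      h = h * 31 + i;
    }
    return "value-" + id + "-" + (h & 0xff);
  }

  // Check the cache first; only on a miss call doLongCalculation() and store
  // the result. (Two threads that miss at the same time may both compute.)
  String doStuff(int id) {
    String value = cache.get(id);
    if (value != null) {
      hits.incrementAndGet();
      return value;
    }
    misses.incrementAndGet();
    value = doLongCalculation(id);
    cache.put(id, value);
    return value;
  }

  public static void main(String[] args) {
    CacheAsideBenchmark bench = new CacheAsideBenchmark();
    int n = 300_000;  // same repetition count as the benchmark

    long start = System.nanoTime();
    for (int i = 0; i < n; i++) bench.doLongCalculation(1);
    long directNs = System.nanoTime() - start;

    start = System.nanoTime();
    for (int i = 0; i < n; i++) bench.doStuff(1);  // same key: the 0% miss case
    long cachedNs = System.nanoTime() - start;

    System.out.printf("direct: %d ms, cached: %d ms, hits=%d, misses=%d%n",
        directNs / 1_000_000, cachedNs / 1_000_000, bench.hits.get(), bench.misses.get());
  }
}
```

Timings from a loop like this are only indicative (there is no JIT warm-up, and the stand-in is not a real database round trip); a dedicated harness such as JMH gives more trustworthy micro-benchmark numbers.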

| Type | Time (300k calls) | Throughput | MySQL CPU |

|---|---|---|---|

| JDBC | 30s | 10.0 req/ms | 35% |

| Cache | 0.3s | 1095 req/ms | 0% |

Even this simple test shows how caches can win. In this benchmark, the cached version is significantly faster and removes the database load:

- 100x faster

- No MySQL load (35% CPU drops to 0%)
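The "100x" figure and the throughput column follow directly from the 300k-call totals. The throughput ratio comes out a little higher (about 110x), presumably because the 0.3s cached time is rounded (300k calls at 1095 req/ms take about 0.27s):

$$ \frac{300{,}000\ \text{requests}}{30{,}000\ \text{ms}} = 10\ \text{req/ms}, \qquad \frac{30\ \text{s}}{0.3\ \text{s}} = 100, \qquad \frac{1095\ \text{req/ms}}{10\ \text{req/ms}} \approx 110 $$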

To get more realistic numbers, you'll need to benchmark the difference on a full application. Micro-benchmarks like this are useful for explaining concepts, but real benchmarks require testing against your own application, in combination with profiling. For example, Resin's simple profiling capabilities in /resin-admin or the PDF report can quickly give you simple data on your application's performance.

Improving Cache Performance

Cache Performance Equation

$$ t = p_{\text{miss}}\, t_{\text{miss}} + (1 - p_{\text{miss}})\, t_{\text{hit}} $$

where:

- t is the average time per request

- p_miss is the miss rate

- t_miss is the time taken for a miss (e.g. database time)

- t_hit is the time taken for a hit (cache implementation overhead)

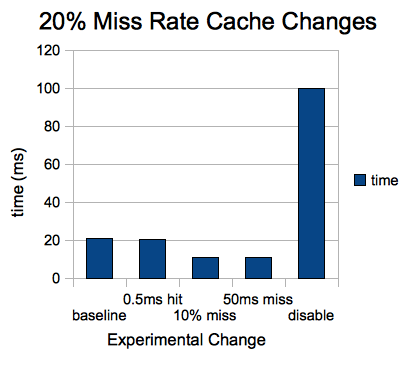

20% miss, 100ms miss time, 1ms hit time

If your cache has a fairly high 20% miss rate, it can already improve your performance by about 5x. Even a terrible miss ratio of 50% can improve performance by about 2x. And this might be good enough for you, because the 80/20 rule applies: if improving that database performance by 5x is good enough, you can move on to a different performance problem.
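Plugging this scenario into the cache performance equation shows where those figures come from, taking the 100ms "disable cache" time as the uncached baseline:

$$
\begin{aligned}
t_{20\%} &= 0.2 \times 100\ \text{ms} + 0.8 \times 1\ \text{ms} = 20.8\ \text{ms}, & \frac{100\ \text{ms}}{20.8\ \text{ms}} &\approx 4.8 \\
t_{50\%} &= 0.5 \times 100\ \text{ms} + 0.5 \times 1\ \text{ms} = 50.5\ \text{ms}, & \frac{100\ \text{ms}}{50.5\ \text{ms}} &\approx 2.0
\end{aligned}
$$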

But suppose you do need better performance than the 5x improvement. What changes will help? After all, there's no sense spending time trying to improve something that doesn't matter. We can take the basic cache performance equation and try some experiments:

- improve the cache implementation (by asking Caucho to speed up Resin Cache)

- improve the miss ratio (typically by increasing the cache size, but possibly by refactoring)

- improve the miss time (by speeding up the database code, optimizing queries, etc.)

For each experiment, we'll see what happens if we can improve by a factor of 2: improving the miss ratio from 20% to 10%, speeding Resin Cache from 1ms to 0.5ms, and improving the database time from 100ms to 50ms.
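The following sketch evaluates the cache performance equation for a baseline and for each factor-of-2 change. The class and method names are hypothetical; the only input is the equation itself. With the inputs 0.20, 100 and 1 it should reproduce the 20.8ms, 20.4ms, 10.9ms and 10.8ms values in the table, and the same method covers the later scenarios.

```java
// Sketch: evaluate the cache performance equation
//   t = p_miss * t_miss + (1 - p_miss) * t_hit
// for a baseline and for each "improve by a factor of 2" experiment.
// Class and method names are illustrative, not part of Resin.
class CacheExperiments {
  // Average time per request in ms.
  static double expectedTime(double pMiss, double tMissMs, double tHitMs) {
    return pMiss * tMissMs + (1 - pMiss) * tHitMs;
  }

  static void printExperiments(String title, double pMiss, double tMiss, double tHit) {
    System.out.println(title);
    System.out.printf("  no change:      %.3f ms%n", expectedTime(pMiss, tMiss, tHit));
    System.out.printf("  half hit time:  %.3f ms%n", expectedTime(pMiss, tMiss, tHit / 2));
    System.out.printf("  half miss rate: %.3f ms%n", expectedTime(pMiss / 2, tMiss, tHit));
    System.out.printf("  half miss time: %.3f ms%n", expectedTime(pMiss, tMiss / 2, tHit));
    // With no cache, every request pays the full miss time.
    System.out.printf("  disable cache:  %.3f ms%n", tMiss);
  }

  public static void main(String[] args) {
    printExperiments("20% miss, 100ms miss time, 1ms hit time", 0.20, 100, 1);
    printExperiments("1% miss, 100ms miss time, 1ms hit time", 0.01, 100, 1);
    printExperiments("20% miss, 2ms miss time, 1ms hit time", 0.20, 2, 1);
  }
}
```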

| Change | Performance |

|---|---|

| no change | 20.8ms |

| 0.5ms hit time | 20.4ms |

| 10% miss rate | 10.9ms |

| 50ms miss time | 10.8ms |

| disable cache | 100ms |

Speeding up the cache implementation: doesn't help much. Even if the Caucho engineers halved the Resin Cache hit time (1ms to 0.5ms) in this scenario, your performance would only improve from 20.8ms to 20.4ms, because the scenario is still dominated by the database miss time and the miss rate. See the next scenario (with a great 1% miss ratio) for a case where the cache performance matters more.

Improving the miss ratio from 20% to 10%: a big help, about a 2x speedup (20.8ms to 10.9ms). Every extra improvement in the miss ratio helps this scenario. This might mean increasing the cache memory or disk size, or extending the expire times.

Improving the database miss time from 100ms to 50ms: a big help, again about 2x (20.8ms to 10.8ms). Even when you're caching, improving the miss time (the database time or RPC service time) will help your performance when you have a reasonably high miss ratio like 20%. So caching helps, but you still want to write good code behind the cache.

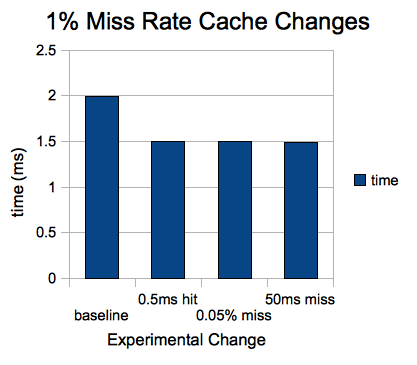

1% miss, 100ms miss time, 1ms hit time

| Change | Performance |

|---|---|

| no change | 1.99ms |

| 0.5ms hit time | 1.5ms |

| 0.5% miss rate | 1.5ms |

| 50ms miss time | 1.49ms |

| disable cache | 100ms |

20% miss, 2ms miss time, 1ms hit time

| Change | Performance |

|---|---|

| no change | 1.2ms |

| 0.5ms hit time | 0.8ms |

| 10% miss rate | 1.1ms |

| 1ms miss time | 1ms |

| disable cache | 2ms |
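Comparing the share of the average time spent on cache hits, (1 - p_miss) × t_hit / t, across the three scenarios shows why halving the hit time barely matters in the first scenario but matters a lot in the last two:

$$
\begin{aligned}
\text{20\% miss, 100 ms miss time:}\quad & \frac{0.8 \times 1\ \text{ms}}{20.8\ \text{ms}} \approx 4\% \\
\text{1\% miss, 100 ms miss time:}\quad & \frac{0.99 \times 1\ \text{ms}}{1.99\ \text{ms}} \approx 50\% \\
\text{20\% miss, 2 ms miss time:}\quad & \frac{0.8 \times 1\ \text{ms}}{1.2\ \text{ms}} \approx 67\%
\end{aligned}
$$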

Cache Performance: Improving the Miss Ratio

As the previous experiments showed, improving the miss ratio is often the best way to improve your cache performance, because the more requests hit the cache, the more requests can be served quickly. There are a few general ways of improving the miss ratio, including:

- increase the cache size so more items are in the cache

- increase the expire time so items stay valid longer

- update the cache with new values instead of invalidating
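As a minimal sketch of the third point, assuming a single writer and plain HashMaps standing in for the cache and the database (all names hypothetical), the code below counts misses when each write either invalidates the cached entry or stores the new value in it:

```java
import java.util.HashMap;
import java.util.Map;

// Sketch comparing invalidate-on-write with update-on-write.
// ASSUMPTION: HashMaps stand in for the cache and the database; single-threaded.
class UpdateVsInvalidate {
  final Map<String, String> database = new HashMap<>();
  final Map<String, String> cache = new HashMap<>();
  int misses = 0;

  // Cache-aside read: load from the "database" only on a miss.
  String read(String key) {
    String value = cache.get(key);
    if (value == null) {
      misses++;
      value = database.get(key);  // the expensive load in a real application
      cache.put(key, value);
    }
    return value;
  }

  // Strategy 1: write to the database and drop the cached copy.
  void writeAndInvalidate(String key, String value) {
    database.put(key, value);
    cache.remove(key);
  }

  // Strategy 2: write to the database and store the new value in the cache.
  void writeAndUpdate(String key, String value) {
    database.put(key, value);
    cache.put(key, value);
  }

  // Simulate `rounds` writes, each followed by `readsPerWrite` reads.
  static int missesFor(boolean update, int rounds, int readsPerWrite) {
    UpdateVsInvalidate c = new UpdateVsInvalidate();
    for (int i = 0; i < rounds; i++) {
      String v = "v" + i;
      if (update) c.writeAndUpdate("item", v); else c.writeAndInvalidate("item", v);
      for (int r = 0; r < readsPerWrite; r++) c.read("item");
    }
    return c.misses;
  }

  public static void main(String[] args) {
    System.out.println("invalidate misses: " + missesFor(false, 100, 10));
    System.out.println("update misses:     " + missesFor(true, 100, 10));
  }
}
```

With 100 writes each followed by 10 reads, invalidation costs one miss per write (100 misses), while updating keeps the entry warm (0 misses). The trade-off is that updating writes every changed value into the cache, even for items nobody reads again.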

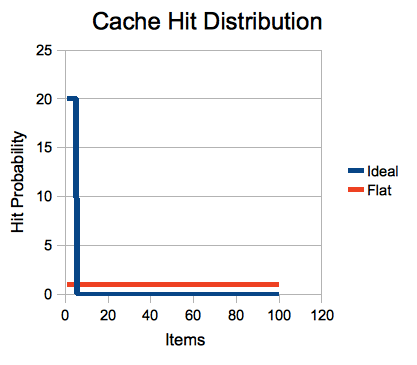

Fitting in the Working Set: Cache Size

Increasing the cache size will often improve cache performance by improving the miss ratio, but once your working set is in the cache, further cache size increases will only give you minimal improvement. Some reasons that increasing the cache size might not improve performance:

- the working set is already in the cache

- the items are expiring before they're reused

- the items are changing before they're reused
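To illustrate the second reason, here is a sketch of a cache with a fixed expire time (TTL), assuming a simulated clock passed in as a millisecond timestamp so the result is deterministic. The class and its methods are hypothetical, not the Resin or JCache expiry API.

```java
import java.util.HashMap;
import java.util.Map;

// Sketch of expire times: an entry counts as a miss once it is older than the TTL.
// ASSUMPTION: time is a simulated clock (ms) passed to get(); names are illustrative.
class ExpiringCache {
  private static final class Entry {
    final String value;
    final long storedAtMs;

    Entry(String value, long storedAtMs) {
      this.value = value;
      this.storedAtMs = storedAtMs;
    }
  }

  private final Map<String, Entry> entries = new HashMap<>();
  private final long ttlMs;
  int hits = 0, misses = 0;

  ExpiringCache(long ttlMs) {
    this.ttlMs = ttlMs;
  }

  String get(String key, long nowMs) {
    Entry e = entries.get(key);
    if (e != null && nowMs - e.storedAtMs < ttlMs) {
      hits++;
      return e.value;
    }
    misses++;  // missing or expired: reload and restart the TTL
    String value = "loaded-" + key;  // stand-in for the database load
    entries.put(key, new Entry(value, nowMs));
    return value;
  }

  // Read one key every `intervalMs` for `reads` reads; return the miss count.
  static int missesFor(long ttlMs, long intervalMs, int reads) {
    ExpiringCache cache = new ExpiringCache(ttlMs);
    for (int i = 0; i < reads; i++) cache.get("item", i * intervalMs);
    return cache.misses;
  }

  public static void main(String[] args) {
    // The item is reused every 60 seconds.
    System.out.println("ttl 30s:  " + missesFor(30_000, 60_000, 100) + " misses");
    System.out.println("ttl 120s: " + missesFor(120_000, 60_000, 100) + " misses");
    System.out.println("ttl 10m:  " + missesFor(600_000, 60_000, 100) + " misses");
  }
}
```

An item reused every 60 seconds misses on every read with a 30-second TTL, no matter how large the cache is; with a 120-second TTL only every other read misses, and with 10 minutes only one read in ten.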

The working set is the set of items that are used most often. The distribution of the working set varies greatly between applications. Some applications have a small working set: everyone looks at the same top 10 items in a catalog. Other applications have a large or flat working set: a social networking site has a separate page for each of a large number of users.

When the cache is smaller than the working set, increasing the cache size helps performance considerably. If you only fit 5 of your 10 catalog items in the cache, increasing the cache size to 10 might improve performance almost 50%. But if your 10 items are already in the cache, even doubling the cache size might only give you a small gain.
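A sketch of the catalog example, assuming an LRU eviction policy (built on LinkedHashMap's access order and removeEldestEntry) and uniformly random reads over 10 items. Resin's actual eviction policy may differ, and the names are illustrative.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.Random;

// Sketch: cache size vs. working set. ASSUMPTIONS: LRU eviction and uniformly
// random reads over a 10-item catalog; not necessarily Resin's policy.
class WorkingSetDemo {
  static class LruCache<K, V> extends LinkedHashMap<K, V> {
    private final int capacity;

    LruCache(int capacity) {
      super(16, 0.75f, true);  // true = access order, least recently used first
      this.capacity = capacity;
    }

    @Override
    protected boolean removeEldestEntry(Map.Entry<K, V> eldest) {
      return size() > capacity;  // evict the least recently used entry
    }
  }

  // Count misses for `requests` uniformly random reads over `items` keys.
  static int misses(int cacheSize, int items, int requests, long seed) {
    LruCache<Integer, String> cache = new LruCache<>(cacheSize);
    Random random = new Random(seed);
    int misses = 0;
    for (int i = 0; i < requests; i++) {
      int key = random.nextInt(items);
      if (cache.get(key) == null) {
        misses++;
        cache.put(key, "item-" + key);  // load from the database on a miss
      }
    }
    return misses;
  }

  public static void main(String[] args) {
    for (int size : new int[] {5, 10, 20}) {
      int m = misses(size, 10, 100_000, 42);
      System.out.printf("cache size %2d: miss ratio %.2f%%%n", size, 100.0 * m / 100_000);
    }
  }
}
```

With uniform reads, the 5-entry cache should miss on roughly half of the requests, the 10-entry cache misses only on the first read of each item, and the 20-entry cache does no better than 10 because the whole working set already fits.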

A large working set can fit into a cache if the cache is very large. For example, Resin's cache saves cache data to disk, letting you store 100GB of data or more. At that size, your cache becomes more like a persistent store than a cache, and for some applications, that's exactly what's needed.

The Resin ClusterCache implementation

Since Resin's ClusterCache is a persistent cache, the entries you save will be stored to disk and recovered. This means you can store lots of data in the cache without worrying about running out of memory. (LocalCache is also a persistent cache.) If the memory becomes full, Resin will use the cache entries that are on disk. For performance, commonly-used items will remain in memory.
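The memory-plus-disk behavior described above can be sketched as two tiers: every entry is persisted to a "disk" tier, and a small LRU map keeps recently used entries in memory, promoting disk entries back on access. This is a hypothetical illustration, not Resin's ClusterCache code; the disk tier is simulated with a HashMap.

```java
import java.util.HashMap;
import java.util.LinkedHashMap;
import java.util.Map;

// Sketch of a persistent two-tier cache. ASSUMPTION: the "disk" tier is a HashMap
// stand-in (a real one would write files). Not Resin's ClusterCache implementation.
class TwoTierCache<K, V> {
  private final Map<K, V> disk = new HashMap<>();  // stand-in for on-disk storage
  private final Map<K, V> memory;                  // recently used entries only
  int memoryHits = 0, diskHits = 0, misses = 0;

  TwoTierCache(int memoryCapacity) {
    // Access-ordered LinkedHashMap: drops the least recently used entry from
    // memory when full. The entry stays on disk, so it is not lost.
    memory = new LinkedHashMap<K, V>(16, 0.75f, true) {
      @Override
      protected boolean removeEldestEntry(Map.Entry<K, V> eldest) {
        return size() > memoryCapacity;
      }
    };
  }

  void put(K key, V value) {
    disk.put(key, value);    // persist every entry
    memory.put(key, value);  // and keep it in memory while it is hot
  }

  V get(K key) {
    V value = memory.get(key);
    if (value != null) {
      memoryHits++;
      return value;
    }
    value = disk.get(key);
    if (value != null) {
      diskHits++;
      memory.put(key, value);  // promote the entry back into memory
      return value;
    }
    misses++;
    return null;
  }

  public static void main(String[] args) {
    TwoTierCache<Integer, String> cache = new TwoTierCache<>(2);
    for (int i = 0; i < 5; i++) cache.put(i, "value-" + i);
    // Only entries 3 and 4 fit in memory; entry 0 is served from disk.
    System.out.println(cache.get(4) + " " + cache.get(0));
    System.out.printf("memory hits=%d, disk hits=%d, misses=%d%n",
        cache.memoryHits, cache.diskHits, cache.misses);
  }
}
```

Reading entry 0 after five puts into a 2-entry memory tier is served from the disk tier and then promoted back into memory, so a second read of it is a memory hit.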